Networking and Content Delivery Overview

This section provides an in-depth exploration of three essential Amazon Web Services (AWS) offerings for networking and content delivery: Amazon Virtual Private Cloud (Amazon VPC), Amazon Route 53, and Amazon CloudFront. It covers the following core topics:

- Introduction to Networking Concepts

- OSI Model and Networking Layers

- Amazon Virtual Private Cloud (VPC)

- Networking in VPC: IP Address Allocation, Reserved IP Addresses in a Subnet, Public and Elastic IP Addresses, ENI, Internet Gateway and NAT Gateway

- VPC Sharing and Peering

- AWS Site-to-Site VPN and Direct Connect

- Security Measures within VPC

- Amazon Route 53

- Amazon CloudFront

Key Learning Outcomes

This module is designed to equip you with fundamental networking knowledge, focusing specifically on the AWS services mentioned. You will engage in practical activities such as labeling network diagrams and designing a basic VPC architecture. A recorded demonstration will guide you through using the VPC Wizard to create a VPC that includes both public and private subnets. Following the demonstration, you will have the opportunity to apply your learning in a hands-on lab by creating a VPC and launching a web server using the VPC Wizard. Finally, you will complete a knowledge check to reinforce your understanding of the key concepts discussed.

Understanding Networking

A computer network consists of two or more client devices connected to share resources. These networks can be logically divided into smaller subnets. The connection and communication between these devices are facilitated by networking equipment such as routers or switches.

Every device on a network is assigned a unique Internet Protocol (IP) address, which acts as an identifier. An IP address is a numerical label, typically represented in a decimal format, which is converted by machines into a binary format for processing.

For example, consider the IP address 203.0.113.10. This address comprises four decimal numbers separated by periods, each representing one 8-bit octet. This means each of the four numbers can range from 0 to 255, and together they form a 32-bit binary number, commonly known as an IPv4 address.
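As a minimal sketch of the decimal-to-binary conversion described above, the following uses Python's standard `ipaddress` module. The helper name `to_binary_octets` is an illustrative choice, not something from the course material.

```python
import ipaddress


def to_binary_octets(address):
    """Return the dotted-binary form of an IPv4 address."""
    # .packed is the 4-byte representation: one byte per octet
    packed = ipaddress.IPv4Address(address).packed
    return ".".join(f"{octet:08b}" for octet in packed)


print(to_binary_octets("203.0.113.10"))
# 11001011.00000000.01110001.00001010
```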

IPv6, on the other hand, is a more advanced version of IP addressing, offering 128-bit addresses. IPv6 addresses provide a much larger address space, making them capable of accommodating a greater number of devices. These addresses are structured into eight groups of four hexadecimal digits separated by colons. For instance, an IPv6 address could look like 2400:cb00:2049:1::a29f:1a2b.
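The example address above is written in compressed form, where `::` stands for a run of all-zero groups and leading zeros are dropped. A short sketch, again assuming Python's standard `ipaddress` module, expands it to the full eight groups:

```python
import ipaddress

addr = ipaddress.IPv6Address("2400:cb00:2049:1::a29f:1a2b")

# Full form: eight groups of four hexadecimal digits
print(addr.exploded)    # 2400:cb00:2049:0001:0000:0000:a29f:1a2b
# Shortest form: leading zeros dropped, one zero run replaced by "::"
print(addr.compressed)  # 2400:cb00:2049:1::a29f:1a2b

groups = addr.exploded.split(":")
print(len(groups), "groups,", addr.max_prefixlen, "bits")
```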

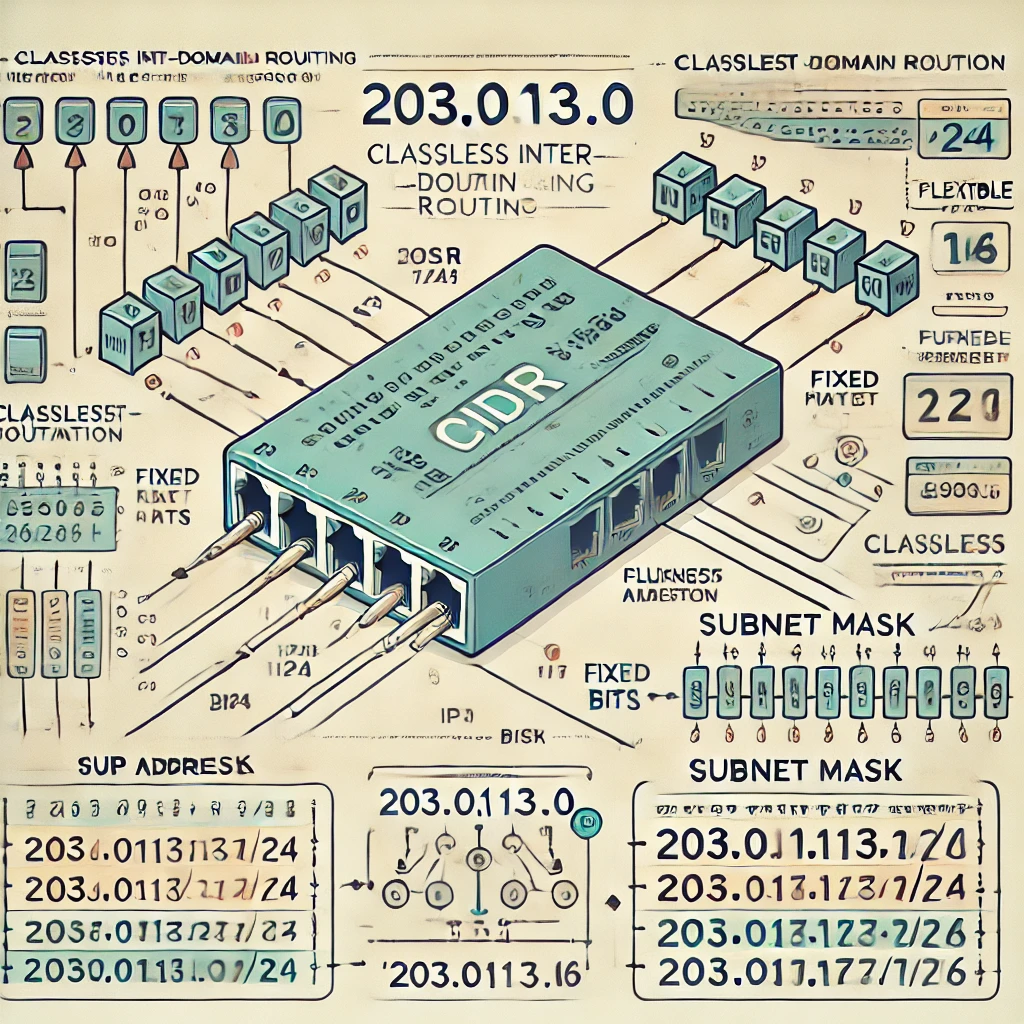

CIDR Notation and Network Addressing

Classless Inter-Domain Routing (CIDR) is a technique used to allocate IP addresses more efficiently. A CIDR notation includes:

- The IP address (representing the network’s starting point)

- A forward slash (/)

- A suffix indicating the number of fixed bits in the network portion of the address

The non-fixed bits in the address can vary, allowing for a range of IP addresses within a specific network. For instance, the CIDR address 203.0.113.0/24 specifies that the first 24 bits are fixed, leaving the remaining 8 bits flexible. This configuration allows for 256 unique IP addresses ranging from 203.0.113.0 to 203.0.113.255.

In cases where more addresses are needed, a shorter prefix such as 203.0.113.0/16 (written canonically as 203.0.0.0/16) fixes only the first 16 bits and allows the remaining 16 bits to vary, providing 65,536 IP addresses within the range 203.0.0.0 to 203.0.255.255.
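The two ranges above can be checked with a short sketch based on Python's standard `ipaddress` module. The function name `describe` is hypothetical; `strict=False` is used so that an address with host bits set, such as 203.0.113.0/16, is normalized to its network address instead of raising an error.

```python
import ipaddress


def describe(cidr):
    """Return (first address, last address, address count) for a CIDR block."""
    net = ipaddress.ip_network(cidr, strict=False)
    return str(net.network_address), str(net.broadcast_address), net.num_addresses


print(describe("203.0.113.0/24"))  # ('203.0.113.0', '203.0.113.255', 256)
print(describe("203.0.113.0/16"))  # ('203.0.0.0', '203.0.255.255', 65536)
```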

Special cases in CIDR include:

- Fixed IP Addresses: All bits are fixed, representing a single IP address (e.g., 203.0.113.10/32), useful for setting up firewall rules.

- The Internet: Represented as 0.0.0.0/0, indicating that all bits are flexible.
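To illustrate how these two special cases behave in a firewall-style rule, here is a small sketch that tests whether a source address falls inside a rule's CIDR block. The function name `matches` and the sample addresses other than 203.0.113.10 are assumptions made for the example.

```python
import ipaddress


def matches(source_ip, rule_cidr):
    """Return True if source_ip falls inside the rule's CIDR block."""
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule_cidr)


# A /32 rule matches exactly one address
print(matches("203.0.113.10", "203.0.113.10/32"))  # True
print(matches("203.0.113.11", "203.0.113.10/32"))  # False
# 0.0.0.0/0 matches every IPv4 address
print(matches("198.51.100.7", "0.0.0.0/0"))        # True
```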

The accompanying figure illustrates CIDR notation and network addressing: how IP addresses are structured, how CIDR notation is used to create subnets, and how examples such as “203.0.113.0/24” and “203.0.113.0/16” convert from decimal to binary.

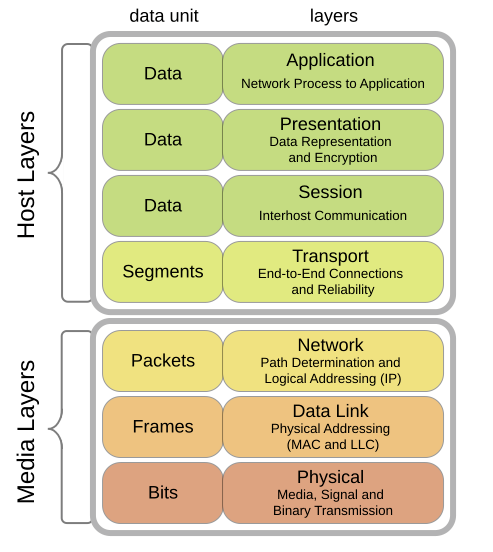

OSI Model and Networking Layers

The Open Systems Interconnection (OSI) model is a framework used to understand how data travels across a network. It breaks down the communication process into seven distinct layers, each responsible for different aspects of the transmission:

- Physical Layer: Involves the actual transmission and reception of raw data (bits) over a physical medium, such as cables.

- Data Link Layer: Manages data transfer between devices on the same local area network (LAN), using devices such as switches and bridges.

- Network Layer: Handles routing and forwarding of data packets across different networks, typically involving routers.

- Transport Layer: Ensures reliable communication between hosts, utilizing protocols such as TCP and UDP.

- Session Layer: Manages sessions and controls the dialogues between two systems.

- Presentation Layer: Translates data between the network format and the application format, often dealing with encryption and compression.

- Application Layer: Provides the interface for the end-user to interact with network services, supporting protocols like HTTP, FTP, and DHCP.

Understanding the OSI model is crucial for grasping how communication occurs in a VPC, which will be explored further in subsequent sections of this module.

Introduction to Amazon VPC

Amazon Virtual Private Cloud (VPC) is a foundational service in AWS that allows you to set up a virtual network environment tailored to your specific requirements. A VPC is essentially a logically isolated segment of the AWS Cloud, within which you can deploy various AWS resources, such as EC2 instances, databases, and more. This isolated environment gives you complete control over your networking configurations, allowing you to define IP address ranges, create subnets, configure routing tables, and set up network gateways.

IP Address Management in Amazon VPC

One of the primary advantages of Amazon VPC is the ability to define your own IP address range, choosing between both IPv4 and IPv6 formats. You have the flexibility to subdivide your VPC into smaller sections called subnets. These subnets can either be public or private. Public subnets are designed to allow access to the internet, typically used for web servers or other resources that need to be accessible publicly. In contrast, private subnets are used for backend systems, such as databases or application servers, that do not require direct internet access, thus enhancing security.

Security Features of Amazon VPC

Moreover, Amazon VPC provides multiple layers of security to safeguard your resources. Security groups act as virtual firewalls to control inbound and outbound traffic at the instance level, while Network Access Control Lists (ACLs) offer an additional layer of security at the subnet level. These features enable you to implement strict access controls and protect your environment from unauthorized access.

Isolation and High Availability in Amazon VPC

VPCs are exclusive to your AWS account and are isolated from other VPCs, ensuring that your resources remain secure and private. Each VPC is associated with a single AWS Region but can span multiple Availability Zones within that region. This allows you to design for high availability by distributing resources across different zones, reducing the risk of a single point of failure.

Subnet Configuration in Amazon VPC

Subnets within a VPC are confined to a single Availability Zone and are categorized as either public or private based on their access configurations. Public subnets are connected to the internet through an Internet Gateway, allowing resources within them to communicate with the internet. Private subnets, on the other hand, are not connected directly to the internet, providing a secure environment for sensitive data and applications.

CIDR Blocks and IP Addressing

When you set up a VPC, you assign it an IPv4 Classless Inter-Domain Routing (CIDR) block, which defines the range of IP addresses available for use within the VPC. The size of the CIDR block can vary, with the largest allowable block being /16 and the smallest being /28. It’s important to note that once the CIDR block is assigned, it cannot be changed. Additionally, Amazon VPC supports IPv6, offering a broader range of IP addresses for your resources, which is crucial as the number of connected devices continues to grow.

Each subnet you create within the VPC also requires a CIDR block, and these blocks must not overlap, ensuring unique addressing within your network. This careful planning of IP address allocation is essential for maintaining a well-structured and scalable network.

In summary, Amazon VPC provides a highly customizable and secure networking environment within the AWS Cloud. It enables you to create and manage isolated virtual networks, configure routing and security settings, and scale your infrastructure with ease. The combination of flexible subnet configurations, multiple security layers, and the ability to use both IPv4 and IPv6 makes Amazon VPC a robust solution for building complex, secure, and scalable cloud architectures.

Virtual Private Cloud (VPC) and IP Address Allocation

When setting up a Virtual Private Cloud (VPC), you assign an IPv4 CIDR block, which defines the range of private IP addresses that can be used within the VPC. The size of this range can vary significantly, from as large as /16 (providing 65,536 IP addresses) to as small as /28 (providing 16 IP addresses). It is crucial to choose the CIDR block carefully since it cannot be modified after the VPC is created. Additionally, you can optionally assign an IPv6 CIDR block to the VPC, which will have different sizing constraints.

Each subnet within a VPC requires its own CIDR block. A subnet’s CIDR block can be identical to the VPC’s CIDR block, resulting in a single subnet that encompasses the entire VPC. Alternatively, you can allocate smaller CIDR blocks to create multiple subnets, as long as they do not overlap. This configuration allows for the separation of network resources within the VPC. It is important to note that no IP addresses can be duplicated within the same VPC.
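The non-overlap requirement can be sketched with Python's standard `ipaddress` module. The VPC range 10.0.0.0/16 and the helper name `overlaps_any` are assumptions chosen for illustration.

```python
import ipaddress
from itertools import islice

vpc = ipaddress.ip_network("10.0.0.0/16")  # assumed VPC CIDR block

# Carve the first three /24 subnets out of the VPC range
subnets = list(islice(vpc.subnets(new_prefix=24), 3))
print([str(s) for s in subnets])
# ['10.0.0.0/24', '10.0.1.0/24', '10.0.2.0/24']


def overlaps_any(candidate, existing):
    """Return True if the candidate CIDR overlaps any existing subnet."""
    cand = ipaddress.ip_network(candidate)
    return any(cand.overlaps(net) for net in existing)


print(overlaps_any("10.0.1.128/25", subnets))  # True: inside 10.0.1.0/24
print(overlaps_any("10.0.3.0/24", subnets))    # False: unused range
```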

Reserved IP Addresses in a Subnet

When creating a subnet, AWS reserves five IP addresses within each CIDR block for specific uses, such as network addresses, internal communications, DNS resolution, future use, and network broadcast. For example, a subnet with a CIDR block of 10.0.0.0/24 (256 IP addresses) will have 251 available IP addresses after accounting for the reserved addresses.
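The arithmetic above can be reproduced with a brief sketch. It assumes the reserved addresses are the first four addresses and the last address of the subnet's CIDR block, which is how AWS documents the reservation; the function name `reserved_addresses` is hypothetical.

```python
import ipaddress


def reserved_addresses(cidr):
    """Return the five addresses AWS reserves in a subnet CIDR block."""
    net = ipaddress.ip_network(cidr)
    # First four: network address, VPC router, DNS, reserved for future use
    first_four = [net.network_address + offset for offset in range(4)]
    # Last one: network broadcast address
    return first_four + [net.broadcast_address]


subnet = "10.0.0.0/24"
reserved = reserved_addresses(subnet)
usable = ipaddress.ip_network(subnet).num_addresses - len(reserved)

print([str(a) for a in reserved])
# ['10.0.0.0', '10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.255']
print(usable)  # 251
```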

Public and Elastic IP Addresses

Instances within a VPC automatically receive a private IP address. Public IP addresses can be manually assigned using an Elastic IP address or automatically through the subnet’s public IP address settings. Elastic IP addresses, which are static public IPv4 addresses, can be associated with instances or network interfaces in a VPC. This allows for flexible management, such as remapping the Elastic IP to different instances in case of a failure. Elastic IPs come with potential additional costs, so it’s recommended to release them when no longer needed.

Elastic Network Interface (ENI)

An Elastic Network Interface (ENI) is a virtual network interface that can be attached or detached from instances within a VPC. The attributes of an ENI, such as security groups and IP addresses, follow it when it is reattached to another instance, enabling seamless redirection of network traffic. Each instance in a VPC comes with a default network interface that is assigned a private IPv4 address.

Route Tables and Networking in a VPC

Route tables in a VPC contain rules that direct network traffic. Each route specifies a destination and a target. By default, every route table includes a local route for communication within the VPC. Subnets must be associated with a route table, with each subnet being able to connect to only one route table at a time. However, multiple subnets can share the same route table.
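As a rough illustration of how a destination/target table is consulted, the sketch below picks the most specific matching route (longest prefix match), which is how VPC route tables resolve competing routes. The route entries, the target ID `igw-example`, and the function name `lookup` are all hypothetical.

```python
import ipaddress

# (destination CIDR, target); the gateway ID is a made-up placeholder
ROUTES = [
    ("10.0.0.0/16", "local"),
    ("0.0.0.0/0", "igw-example"),
]


def lookup(destination, routes=ROUTES):
    """Return the target of the most specific route matching the destination."""
    dest = ipaddress.ip_address(destination)
    candidates = []
    for cidr, target in routes:
        net = ipaddress.ip_network(cidr)
        if dest in net:
            candidates.append((net.prefixlen, target))
    if not candidates:
        return None  # no matching route
    return max(candidates)[1]  # longest prefix wins


print(lookup("10.0.1.25"))     # local
print(lookup("198.51.100.7"))  # igw-example
```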

Internet Gateway and NAT Gateway

An internet gateway facilitates communication between instances in a VPC and the internet. It serves as a target in the VPC route tables for internet-routable traffic and handles network address translation (NAT) for public IPv4 addresses. To allow instances in a private subnet to access the internet without being directly accessible from the internet, a NAT gateway or NAT instance can be used. AWS recommends using NAT gateways for their enhanced availability and bandwidth.

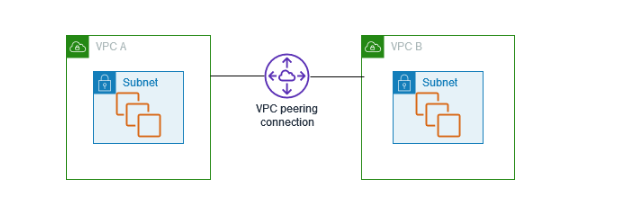

VPC Sharing and Peering

VPC sharing allows subnets to be shared across multiple AWS accounts within the same organization, enabling these accounts to create and manage their resources within shared VPCs. VPC peering, on the other hand, establishes a private networking connection between two VPCs, enabling seamless communication between instances in both VPCs.

A VPC peering connection is a networking connection between two VPCs that enables you to route traffic between them using private IPv4 addresses or IPv6 addresses. Instances in either VPC can communicate with each other as if they are within the same network. You can create a VPC peering connection between your own VPCs, or with a VPC in another AWS account. The VPCs can be in different Regions (also known as an inter-Region VPC peering connection).

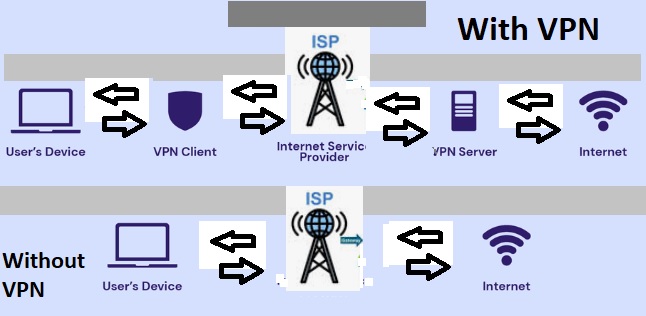

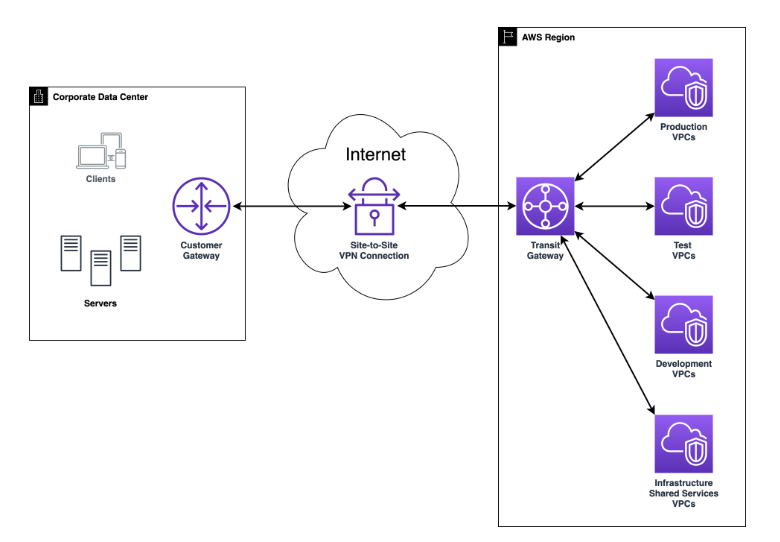

AWS Site-to-Site VPN and Direct Connect

What is VPN?

A VPN, or Virtual Private Network, is a technology that creates a secure, encrypted connection between your device and the internet. This connection allows you to access the internet as if you were in a different location, masking your real IP address and providing privacy and security. VPNs are often used to protect data on public Wi-Fi networks, access region-restricted content, and maintain online anonymity by routing your internet traffic through a server located in another region.

AWS Site-to-Site VPN provides a secure connection between a VPC and a remote network, such as a corporate data center. This connection is established through a virtual private gateway and is managed by configuring route tables and security groups. AWS Direct Connect, on the other hand, offers a dedicated private connection between your network and AWS, providing improved network performance, especially over long distances.

Figure: AWS Site-to-Site VPN

VPC Endpoints

A VPC endpoint is a virtual device that allows you to privately connect your VPC to supported AWS services without requiring an internet gateway or NAT device.

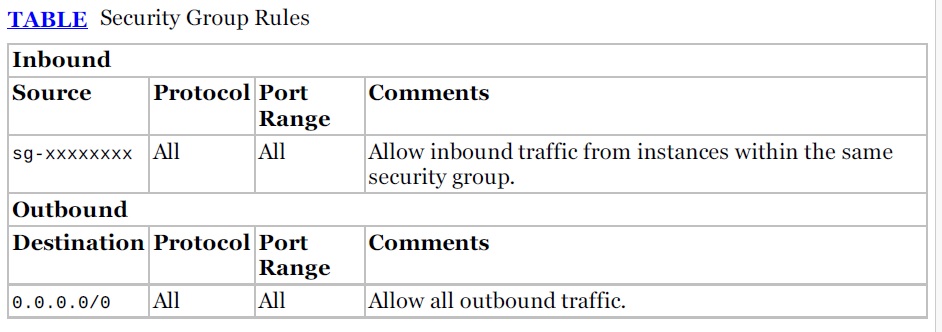

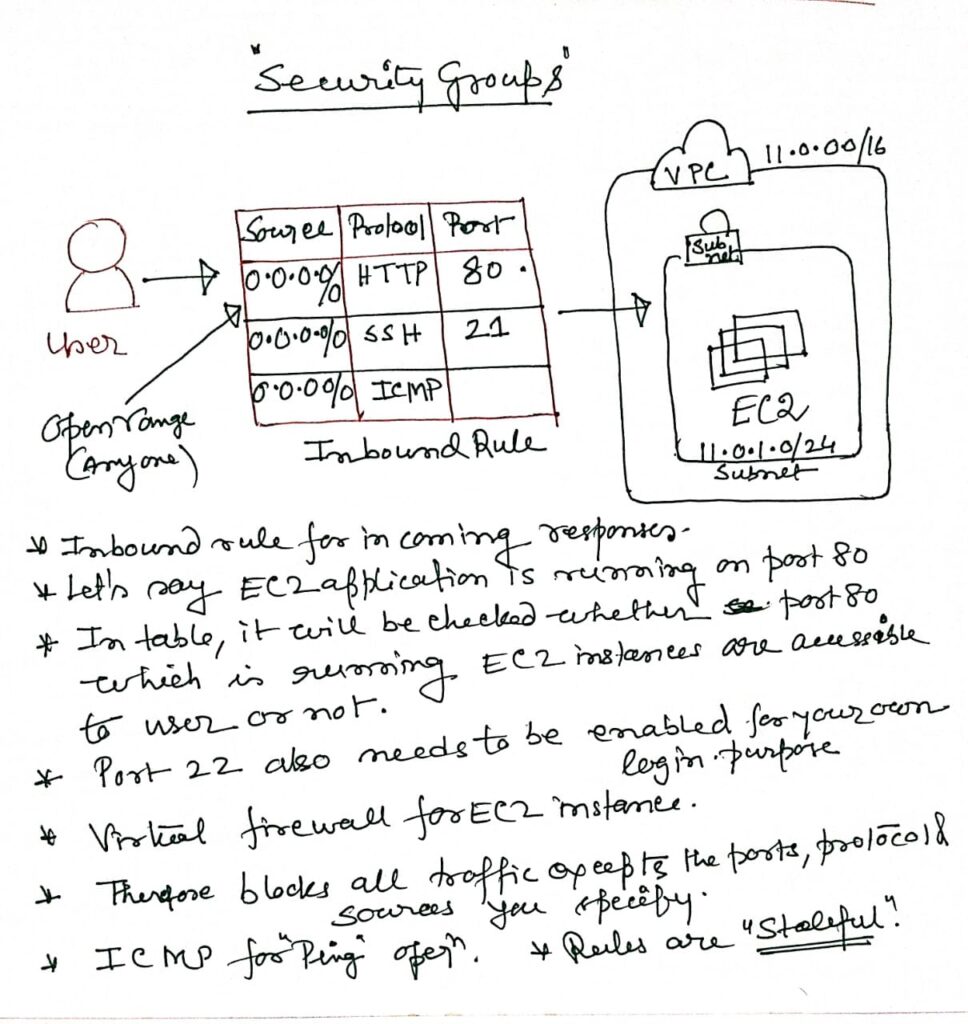

Security Groups Overview:

- Security groups serve as virtual firewalls that manage the inbound and outbound traffic for your instances within a VPC.

- A security group is a virtual stateful firewall that controls inbound and outbound network traffic to AWS resources and Amazon EC2 instances. All Amazon EC2 instances must be launched into a security group. If a security group is not specified at launch, the instance is launched into the default security group for the Amazon VPC. The default security group allows communication between all resources within the security group, allows all outbound traffic, and denies all other traffic. You may change the rules for the default security group, but you may not delete it. The table below describes the settings of the default security group.

- In AWS, a stateful firewall, such as AWS Network Firewall or Security Groups, tracks and manages the state of active connections to allow or block traffic based on the context of those connections. This ensures that only valid, established traffic is permitted through the firewall.

Ref : Baron, J., Baz, H., Bixler, T., Gaut, B., Kelly, K. E., Senior, S., & Stamper, J. (2016). AWS certified solutions architect official study guide: associate exam.

For each security group, you add rules that control the inbound traffic to instances and a separate set of rules that control the outbound traffic. For example, the table below describes a security group for web servers.

Here are the important points to understand about security groups for the exam:

- You can create up to 500 security groups for each Amazon VPC.

- You can add up to 50 inbound and 50 outbound rules to each security group. If you need to apply more than 100 rules to an instance, you can associate up to five security groups with each network interface.

- You can specify allow rules, but not deny rules. This is an important difference between security groups and network access control lists (ACLs).

- You can specify separate rules for inbound and outbound traffic.

- By default, no inbound traffic is allowed until you add inbound rules to the security group.

- By default, new security groups have an outbound rule that allows all outbound traffic.

- You can remove the rule and add outbound rules that allow specific outbound traffic only.

- Security groups are stateful. This means that responses to allowed inbound traffic are allowed to flow outbound regardless of outbound rules and vice versa. This is an important difference between security groups and network ACLs.

- Instances associated with the same security group can’t talk to each other unless you add rules allowing it (with the exception being the default security group).

- You can change the security groups with which an instance is associated after launch, and the changes will take effect immediately.

- By default, security groups block all incoming traffic while allowing all outgoing traffic.

- These groups are inherently stateful, meaning they track the state of each connection so that return traffic is handled automatically.

- When you first establish a security group, it lacks any inbound rules. This means no external traffic can reach your instance until you explicitly add rules to allow it. Conversely, there is a default rule that permits all outbound traffic, which you can modify or replace with more specific rules. If you remove that default rule and define no other outbound rules, all outgoing traffic initiated by your instance will be blocked.

- The stateful nature of security groups ensures that any outgoing traffic originating from your instance automatically allows the corresponding incoming response traffic, regardless of the existing inbound rules. Similarly, if incoming traffic is permitted, the corresponding outbound response traffic is allowed, regardless of the outbound rules.
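The allow-only, stateful behavior summarized in the list above can be modeled with a toy sketch. This is a simplified illustration under stated assumptions, not how AWS implements security groups: the `Rule` and `SecurityGroup` classes, their method names, and the sample addresses are hypothetical, and a connection is reduced to a (protocol, port, remote address) tuple.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """An allow rule; security groups have no deny rules."""
    protocol: str
    port: int
    cidr: str

    def allows(self, protocol, port, ip):
        return (
            self.protocol == protocol
            and self.port == port
            and ipaddress.ip_address(ip) in ipaddress.ip_network(self.cidr)
        )


@dataclass
class SecurityGroup:
    inbound: list = field(default_factory=list)
    outbound: list = field(default_factory=list)
    tracked: set = field(default_factory=set)  # connections seen so far

    def accept_inbound(self, protocol, port, remote_ip):
        """Allow a new inbound connection only if some inbound rule matches."""
        if any(r.allows(protocol, port, remote_ip) for r in self.inbound):
            self.tracked.add((protocol, port, remote_ip))
            return True
        return False  # no matching allow rule means the traffic is dropped

    def allow_response(self, protocol, port, remote_ip):
        """Responses to tracked connections pass regardless of outbound rules."""
        return (protocol, port, remote_ip) in self.tracked


# HTTPS allowed from anywhere; no outbound rules at all
web_sg = SecurityGroup(inbound=[Rule("tcp", 443, "0.0.0.0/0")])

print(web_sg.accept_inbound("tcp", 443, "198.51.100.7"))  # True
print(web_sg.allow_response("tcp", 443, "198.51.100.7"))  # True (stateful)
print(web_sg.accept_inbound("tcp", 22, "198.51.100.7"))   # False
```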

Custom Security Groups:

- When configuring a custom security group, you have the ability to define rules that permit traffic. However, it’s important to note that you cannot create rules to explicitly block traffic. All the rules within the security group are evaluated to determine whether to allow traffic.

Network ACLs:

- Network Access Control Lists (Network ACLs) offer an additional layer of security within your Amazon VPC, functioning as subnet-level firewalls that control inbound and outbound traffic. These ACLs are useful for implementing broader security policies across multiple instances within a subnet.

- Each subnet within your VPC must be associated with a Network ACL. If a subnet isn’t manually linked to a specific Network ACL, it will automatically be connected to the default Network ACL. Although you can link multiple subnets to a single Network ACL, each subnet can only be associated with one Network ACL at any given time. When you associate a new Network ACL with a subnet, any previous association is overridden.

- Network ACLs contain separate rules for inbound and outbound traffic, and each rule can either allow or deny traffic. By default, your VPC comes with a modifiable Network ACL that allows all inbound and outbound IPv4 traffic and, if applicable, IPv6 traffic.

- Unlike security groups, Network ACLs are stateless, meaning they do not retain information about traffic sessions after the request has been processed.

- You can create custom Network ACLs and associate them with your subnets. By default, custom Network ACLs deny all traffic until you add specific rules to allow it.

- Network ACLs evaluate rules in a sequential order based on their assigned number, starting with the lowest. The rules determine whether traffic is permitted to enter or exit any associated subnet. The highest rule number that can be used is 32,766. It is recommended to create rules in numerical increments (such as increments of 10 or 100) to leave space for future rule additions.

- Custom Network ACLs, by default, deny all inbound and outbound traffic until rules are specified.

- Both allow and deny rules can be established within a Network ACL.

- Rules are evaluated in the order of their assigned numbers, beginning with the lowest.
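The numbered, first-match evaluation described above can be sketched as follows. The rule tuples, the sample addresses, and the function name `evaluate` are assumptions for illustration; a real Network ACL rule also carries a protocol and a port range, which this toy version reduces to a single port.

```python
import ipaddress


def evaluate(rules, source_ip, port):
    """Apply rules in ascending rule-number order; the first match decides.

    rules: iterable of (rule_number, cidr, port, action) tuples.
    """
    src = ipaddress.ip_address(source_ip)
    for number, cidr, rule_port, action in sorted(rules, key=lambda r: r[0]):
        if rule_port == port and src in ipaddress.ip_network(cidr):
            return action
    return "deny"  # nothing matched: traffic is denied


# Numbered in increments of 100 to leave room for later additions
inbound_rules = [
    (200, "0.0.0.0/0", 443, "allow"),
    (100, "203.0.113.0/24", 443, "deny"),
]

print(evaluate(inbound_rules, "203.0.113.10", 443))  # deny  (rule 100 matches first)
print(evaluate(inbound_rules, "198.51.100.7", 443))  # allow (rule 200)
print(evaluate(inbound_rules, "198.51.100.7", 22))   # deny  (no rule matches)
```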

Comparing Security Groups and Network ACLs:

Here is a summary of key differences:

- Security groups operate at the instance level, providing protection for individual instances, while Network ACLs operate at the subnet level, controlling traffic for multiple instances within a subnet.

- Security groups only support rules that allow traffic, while Network ACLs support both allow and deny rules.

- Security groups are stateful, tracking the state of traffic and automatically permitting return traffic, while Network ACLs are stateless and require explicit rules for both incoming and outgoing traffic.

- In security groups, all rules are evaluated before making a decision on traffic. In contrast, Network ACLs evaluate rules in the order of their numbers to determine whether traffic is allowed or denied.

VPC Design Scenario:

Scenario: Imagine you are running a small business, with a website hosted on an Amazon EC2 instance. Your customers’ data is stored in a backend database that must remain private. You plan to use Amazon VPC to create a secure and reliable network infrastructure that meets the following criteria:

- The web server and database server should be placed in different subnets.

- The network should start with the IP address 10.0.0.0, with each subnet having 256 total IPv4 addresses.

- The web server should always be accessible to customers.

- The database server should have internet access for updates, while maintaining security.

- The architecture must ensure high availability and incorporate at least one custom firewall layer for added protection.

Design Task: Your goal is to design a VPC that fulfills the following requirements:

- Separate subnets for the web server and the database server.

- The network must start at the address 10.0.0.0, with each subnet containing 256 IPv4 addresses.

- Ensure that customers can always access the web server without interruption.

- Allow the database server to connect to the internet for necessary updates, while keeping the data secure.

- Ensure the architecture is highly available and includes at least one custom firewall layer to enhance security.
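One possible starting point for the addressing part of this task is sketched below. It is only one of several valid layouts and rests on assumptions the scenario does not state: a 10.0.0.0/16 VPC, two Availability Zones, and the hypothetical subnet names shown. Each /24 block contains 256 addresses, matching the requirement.

```python
import ipaddress
from itertools import islice

vpc = ipaddress.ip_network("10.0.0.0/16")  # assumed VPC size

# Hypothetical names: one public and one private subnet per Availability Zone
names = ["public-a", "private-a", "public-b", "private-b"]
plan = dict(zip(names, islice(vpc.subnets(new_prefix=24), len(names))))

for name, block in plan.items():
    # Public subnets would route 0.0.0.0/0 to an internet gateway,
    # private subnets to a NAT gateway (for database updates).
    default_route = "internet gateway" if name.startswith("public") else "NAT gateway"
    print(f"{name}: {block} ({block.num_addresses} addresses), default route via {default_route}")
```

A custom Network ACL on the private subnets would then supply the additional firewall layer the scenario asks for.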

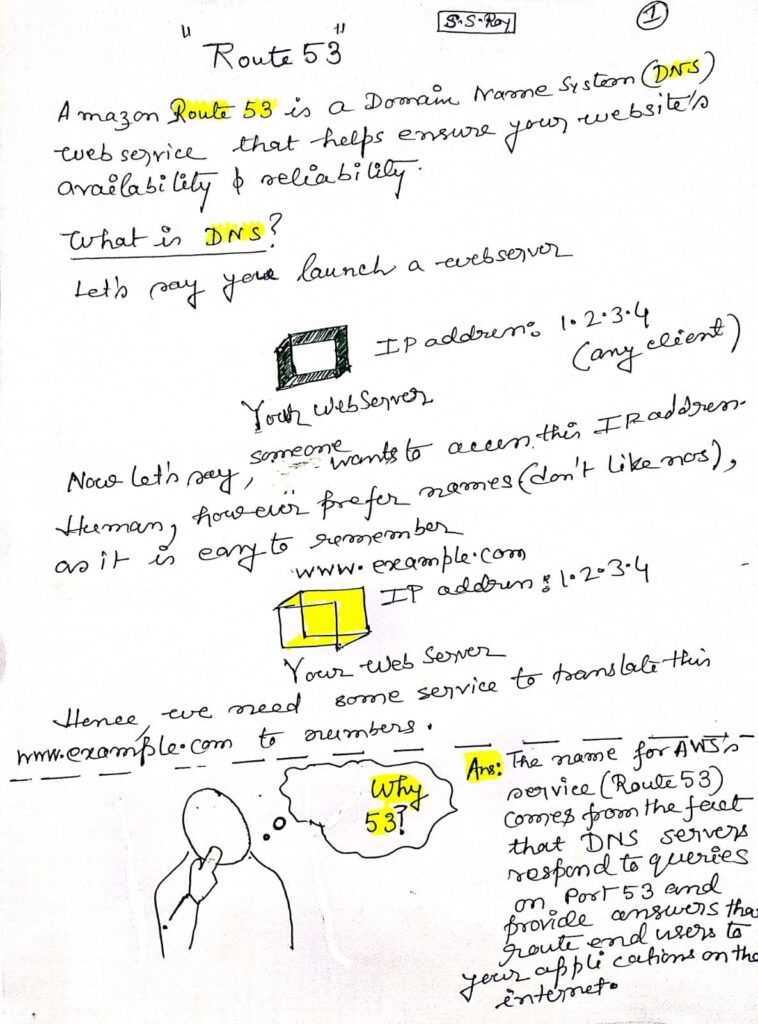

Amazon Route 53

Overview

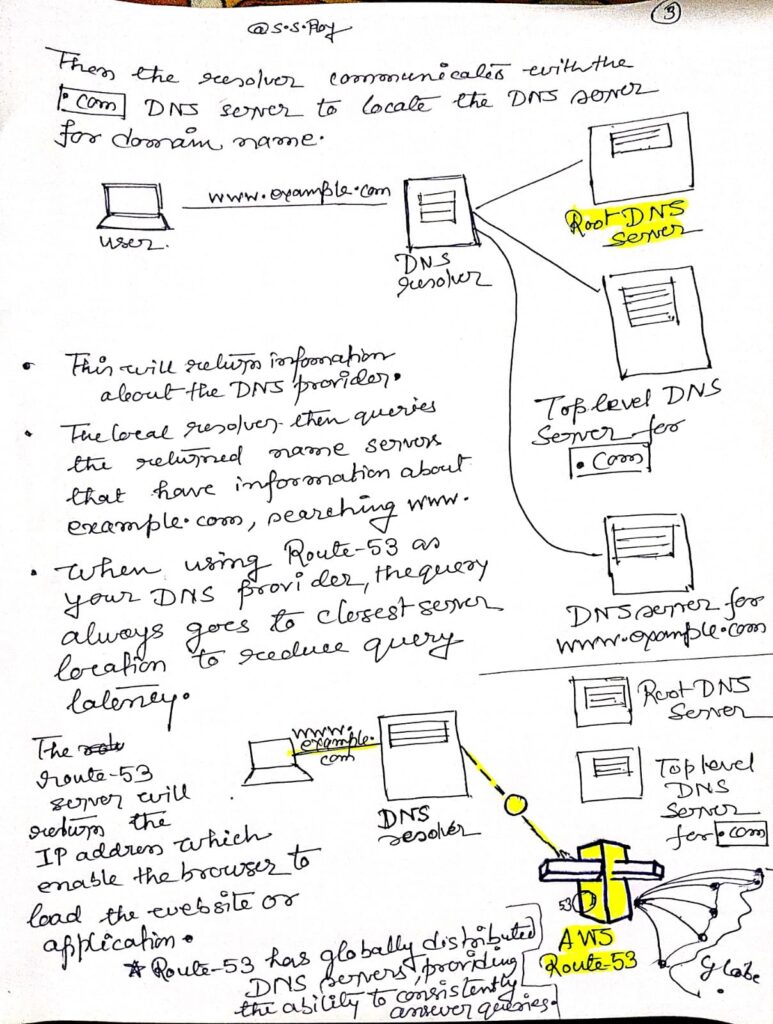

Amazon Route 53 is a highly available and scalable Domain Name System (DNS) web service provided by AWS. It plays a crucial role in routing users to internet applications by converting human-readable domain names (like www.example.com) into machine-friendly IP addresses (e.g., 192.0.2.1). This process is essential for enabling communication between devices across the internet.

What is DNS?

The Domain Name System (DNS) is a hierarchical system that translates human-readable domain names (like www.example.com) into IP addresses (like 192.0.2.1) that computers use to identify each other on the network. DNS functions like a phone book for the internet, allowing users to access websites using easy-to-remember names rather than numeric IP addresses.

Compliance

Route 53 is fully compliant with both IPv4 and IPv6, making it versatile and future-proof, capable of supporting modern and legacy internet protocols alike.

Connectivity

The service connects user requests to AWS-hosted resources, such as EC2 instances, Elastic Load Balancers, and S3 buckets. Additionally, it can route traffic to external infrastructure outside of AWS, offering flexibility in managing both cloud and on-premises environments.

Health Checks

Route 53 includes DNS health checks, which monitor the health of resources in real-time. By setting up these checks, you can ensure that traffic is only routed to endpoints that are operational, thereby maintaining high availability for your applications.

Traffic Flow

With its traffic flow feature, Amazon Route 53 allows you to manage global traffic efficiently. This feature supports multiple routing types and can be combined with DNS failover to create low-latency, fault-tolerant architectures. The service includes a simple visual editor, making it easy to design how traffic is directed across different AWS regions or global endpoints.

Domain Registration

Route 53 also offers domain name registration services. This capability simplifies the process of acquiring and managing domain names directly through AWS. Once registered, Route 53 automatically configures DNS settings, making domain management straightforward.

Routing Policies

Amazon Route 53 supports various routing policies to determine how it responds to DNS queries:

- Simple Routing: Used for routing traffic to a single resource, such as a web server.

- Weighted Routing: Allows you to distribute traffic across multiple resources based on assigned weights, which is useful for A/B testing. For example, if one resource is assigned a weight of 3 and another a weight of 1, 75% of the traffic will be directed to the former, and 25% to the latter.

- Latency Routing: Routes traffic to the AWS region that offers the lowest latency, ensuring the fastest possible user experience by directing traffic to the most responsive server.

- Geolocation Routing: Routes traffic based on the geographic location of the user. This is particularly useful for delivering localized content and for complying with regional content distribution regulations.

- Geoproximity Routing: Routes traffic based on the location of resources and can adjust traffic distribution between resources in different locations.

- Failover Routing: Enables active-passive failover configurations. In the event of an outage, Route 53 can automatically redirect traffic to a backup location, ensuring continuous service availability.

- Multivalue Answer Routing: Responds to DNS queries with multiple IP addresses, selecting up to eight healthy records at random. While not a replacement for a traditional load balancer, this method enhances availability by distributing traffic among multiple endpoints.
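The weighted routing arithmetic from the list above (weights 3 and 1 giving 75% and 25%) can be illustrated with a small simulation. The resource names `blue` and `green` and both function names are hypothetical, and the random selection only approximates how a resolver would spread answers over many queries.

```python
import random


def traffic_share(weights):
    """Expected fraction of traffic per resource: weight / sum of weights."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


def pick(weights, rng):
    """Choose one resource at random, proportionally to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


weights = {"blue": 3, "green": 1}  # hypothetical resources
print(traffic_share(weights))      # {'blue': 0.75, 'green': 0.25}

rng = random.Random(42)            # seeded for repeatability
sample = [pick(weights, rng) for _ in range(10_000)]
print(sample.count("blue") / len(sample))  # close to 0.75
```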

Multi-Region

One significant advantage of Route 53 is its support for multi-region deployment. This feature ensures that users are directed to the Elastic Load Balancer closest to their location, minimizing latency and improving overall user experience. The benefits include efficient latency-based routing and load balancing across different AWS regions.

Availability

Amazon Route 53 is instrumental in enhancing the availability of applications running on AWS. By enabling backup and failover configurations, the service ensures that applications remain accessible even in the event of regional failures. Additionally, Route 53 supports the creation of highly available multi-region architectures, further bolstering reliability.

DNS Failover

DNS failover is a critical feature in multi-tiered web application architectures. In a typical setup, Route 53 routes traffic to a load balancer, which then distributes the load across a fleet of EC2 instances. This architecture ensures that traffic is managed efficiently and that failover processes are seamless.

High Availability Tasks

To maintain high availability, you can perform the following tasks with Route 53:

- Create DNS Records: Establish two DNS records for the Canonical Name Record (CNAME) www with a failover routing policy. The primary record should point to your web application’s load balancer, while the secondary should point to a static Amazon S3 website as a backup.

- Configure Health Checks: Implement Route 53 health checks to monitor the status of your primary application. If the primary endpoint fails, Route 53 will automatically trigger failover to the backup site, ensuring uninterrupted service.
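The active-passive decision described in these tasks can be reduced to a few lines. This is a conceptual sketch only: the record targets are made-up placeholder hostnames, and `resolve_www` is a hypothetical function, not a Route 53 API.

```python
# Hypothetical targets for the two failover records of "www"
RECORDS = {
    "PRIMARY": "web-load-balancer.example.com",
    "SECONDARY": "static-backup-site.example.com",
}


def resolve_www(health_by_target, records=RECORDS):
    """Answer with the primary target while it is healthy, else the secondary."""
    primary = records["PRIMARY"]
    if health_by_target.get(primary, False):
        return primary
    return records["SECONDARY"]


print(resolve_www({"web-load-balancer.example.com": True}))
# web-load-balancer.example.com
print(resolve_www({"web-load-balancer.example.com": False}))
# static-backup-site.example.com
```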

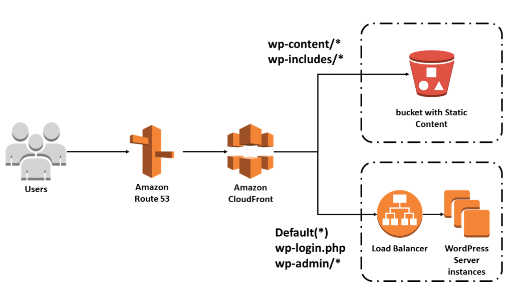

Amazon CloudFront

Introduction

Amazon CloudFront is a content delivery network (CDN) service offered by AWS, designed to accelerate the secure delivery of data, video, applications, and APIs to users worldwide with low latency and high transfer speeds. It offers a developer-friendly environment. Unlike conventional content delivery solutions, CloudFront provides immediate access to enhanced performance without the need for long-term contracts, steep costs, or minimum usage requirements. As with other AWS services, it follows a flexible, pay-as-you-go pricing model.

Amazon CloudFront distributes content via a global network of data centers referred to as edge locations. When a user requests content provided through CloudFront, they are directed to the edge location offering the lowest latency to ensure optimal performance. These edge locations are optimized to rapidly deliver frequently accessed content to users. As certain content decreases in popularity, edge locations may remove it to accommodate newer, more popular data.

For less frequently accessed content, CloudFront utilizes regional edge caches, strategically placed around the globe near your audience. These caches serve as intermediaries between your origin server and the edge locations, and they have larger storage capacities than standard edge locations, allowing content to be retained longer. This arrangement minimizes the need to retrieve data from the origin server, resulting in enhanced performance for users.

To learn more about how CloudFront operates, refer to the “How CloudFront Delivers Content” section in the AWS Documentation.

Amazon CloudFront provides the following benefits:

•Fast and global – Amazon CloudFront is massively scaled and globally distributed. To deliver content to end users with low latency, Amazon CloudFront uses a global network that consists of edge locations and regional caches.

•Security at the edge – Amazon CloudFront provides both network-level and application-level protection. Your traffic and applications benefit through various built-in protections, such as AWS Shield Standard, at no additional cost. You can also use configurable features, such as AWS Certificate Manager (ACM), to create and manage custom Secure Sockets Layer (SSL) certificates at no extra cost.

•Highly programmable – Amazon CloudFront features can be customized for specific application requirements. It integrates with Lambda@Edge so that you can run custom code across AWS locations worldwide, which enables you to move complex application logic closer to users to improve responsiveness. The CDN also supports integrations with other tools and automation interfaces for DevOps. It offers continuous integration and continuous delivery (CI/CD) environments.

•Deeply integrated with AWS – Amazon CloudFront is integrated with AWS, with both physical locations that are directly connected to the AWS Global Infrastructure and other AWS services. You can use APIs or the AWS Management Console to programmatically configure all features in the CDN.

•Cost-effective – Amazon CloudFront is cost-effective because it has no minimum commitments and charges you only for what you use. Compared to self-hosting, Amazon CloudFront avoids the expense and complexity of operating a network of cache servers in multiple sites across the internet. It eliminates the need to overprovision capacity to serve potential spikes in traffic. Amazon CloudFront also uses techniques like collapsing simultaneous viewer requests at an edge location for the same file into a single request to your origin server. The result is reduced load on your origin servers and reduced need to scale your origin infrastructure, which can result in further cost savings. If you use AWS origins such as Amazon Simple Storage Service (Amazon S3) or Elastic Load Balancing, you pay only for storage costs, not for any data transferred between these services and CloudFront.
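
The request collapsing mentioned in the last benefit can be illustrated with a small sketch. This is not CloudFront's implementation; it only shows the idea that several simultaneous requests for the same file result in a single origin fetch. The names `collapse_requests`, `fake_origin`, and the sample paths are hypothetical.

```python
from collections import Counter


def collapse_requests(pending_paths, fetch_from_origin):
    """Answer a batch of simultaneous requests with one origin fetch per distinct path."""
    responses = {}
    for path in pending_paths:
        # Only the first request for a path goes to the origin;
        # later requests for the same path reuse that response.
        if path not in responses:
            responses[path] = fetch_from_origin(path)
    return [responses[path] for path in pending_paths]


# Hypothetical origin that counts how often each path is fetched.
origin_calls = Counter()


def fake_origin(path):
    origin_calls[path] += 1
    return f"body of {path}"


viewer_requests = ["/logo.png", "/logo.png", "/index.html", "/logo.png"]
results = collapse_requests(viewer_requests, fake_origin)

print(len(results))        # 4 viewers receive a response
print(dict(origin_calls))  # {'/logo.png': 1, '/index.html': 1}
```

Four viewer requests reach the edge, but the origin sees only two fetches, which is the load reduction the text describes.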

Amazon CloudFront costs are calculated based on actual usage in four categories:

Data Transfer Out – Charges apply for the amount of data transferred from CloudFront edge locations to the internet or back to your origin (whether AWS or external servers). The volume is measured in GB, with costs calculated separately for different geographic regions using tiered pricing. If AWS services are used as origins, separate charges will apply for storage and compute usage.

HTTP(S) Requests – You are billed based on the number of HTTP(S) requests made to CloudFront for content delivery.

Invalidation Requests – Fees are applied for each path in an invalidation request. Each path represents the URL (or multiple URLs if a wildcard is used) for the object you wish to remove from the CloudFront cache. The first 1,000 invalidation paths per month are free, with charges incurred for any additional paths beyond this limit.

Dedicated IP Custom SSL – A fee of $600 per month is charged for each custom SSL certificate tied to one or more CloudFront distributions using the Dedicated IP version of custom SSL support. This fee is prorated based on usage. For instance, if a custom SSL certificate is used for 24 hours in June, the fee for June would be ($600 / 30 days) * 1 day = $20.
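
The arithmetic in the last two categories can be checked with a short sketch. The $600 monthly fee and the 1,000 free invalidation paths come from the text above; the function names are hypothetical, and the per-path price beyond the free tier is deliberately left out because it is not stated here.

```python
DEDICATED_IP_SSL_MONTHLY_FEE = 600.0  # USD per certificate per month, from the text
FREE_INVALIDATION_PATHS = 1000        # free invalidation paths per month, from the text


def prorated_ssl_fee(days_in_use, days_in_month, monthly_fee=DEDICATED_IP_SSL_MONTHLY_FEE):
    """Prorate the monthly Dedicated IP custom SSL fee by the days the certificate was in use."""
    return monthly_fee / days_in_month * days_in_use


def billable_invalidation_paths(paths_this_month, free_paths=FREE_INVALIDATION_PATHS):
    """Return how many invalidation paths exceed the monthly free allowance."""
    return max(0, paths_this_month - free_paths)


print(prorated_ssl_fee(days_in_use=1, days_in_month=30))  # 20.0
print(billable_invalidation_paths(1250))                  # 250
print(billable_invalidation_paths(400))                   # 0
```

The first call reproduces the June example: one day of use in a 30-day month costs $20.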

For up-to-date pricing details, refer to the Amazon CloudFront pricing page.

Networking Basics

Networking is fundamentally about sharing information between connected resources. In the context of AWS, networking typically involves Amazon Virtual Private Cloud (VPC), which allows you to create a logically isolated network within the AWS cloud. AWS provides various options to connect your VPC to the internet, remote networks, other VPCs, and AWS services.

Content Delivery

Content delivery is a key aspect of networking. Whether you are streaming a movie or browsing a website, your request travels through numerous networks before reaching the origin server, where the definitive version of the content is stored. The performance of this process is influenced by the number of network hops and the distance the request must travel. Geographic differences in network latency further complicate this process, making efficient content delivery crucial.

CDN Overview

A Content Delivery Network (CDN) is a globally distributed system of caching servers that cache copies of commonly requested files, such as HTML, CSS, JavaScript, and images, hosted on the origin server. CDNs reduce latency by delivering a local copy of the requested content from the nearest cache edge or Point of Presence (PoP) to the user, ensuring faster and more reliable content delivery.

Dynamic Content

In addition to static content, CDNs can deliver dynamic content, which is unique to each requester and typically not cacheable. By establishing and maintaining secure connections closer to the user, CDNs improve performance and scalability for dynamic content delivery. Even when the content must be retrieved from the origin, the request benefits from the low-latency connection between the PoP and the origin. Uploads such as form data, images, and text benefit in the same way from the low-latency connections and the proxy behavior of the PoPs.

Amazon CloudFront

Amazon CloudFront is a fast, secure, and developer-friendly CDN service that delivers content globally with low latency and high transfer speeds. It differentiates itself from traditional CDNs by offering a self-service model with pay-as-you-go pricing, eliminating the need for negotiated contracts, high prices, or minimum fees. CloudFront operates through a global network of edge locations and regional edge caches, ensuring optimal content delivery.

Edge Locations

CloudFront delivers content via a worldwide network of edge locations. When a user requests content, the request is routed to the edge location that offers the lowest latency, ensuring the best possible performance. These edge locations are optimized to serve popular content quickly to users.
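
The selection rule can be sketched in a few lines. CloudFront makes this routing decision internally, so the following is only an illustration of "pick the location with the lowest latency"; the function name, the edge location names, and the latency figures are all hypothetical.

```python
def pick_edge_location(latency_ms_by_edge):
    """Return the name of the edge location with the lowest measured latency."""
    return min(latency_ms_by_edge, key=latency_ms_by_edge.get)


# Hypothetical latency measurements from one viewer, in milliseconds.
measured = {
    "edge-frankfurt": 18.0,
    "edge-paris": 11.5,
    "edge-london": 24.2,
}

print(pick_edge_location(measured))  # edge-paris
```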

Regional Caches

As content becomes less popular, individual edge locations might remove it to free up space for more frequently requested data. To handle less popular content efficiently, CloudFront utilizes Regional Edge Caches. These caches, located globally and closer to users, have larger storage capacities than individual edge locations, allowing them to retain content longer. This reduces the need to retrieve content from the origin server, improving overall performance for users.

Content Delivery Process

The process of content delivery through CloudFront involves several steps. When a user requests a file, CloudFront routes the request to the edge location that offers the lowest latency. If the content is in that edge location's cache, it is delivered to the user immediately. If not, CloudFront retrieves the content from a regional edge cache or, if it is not cached there either, from the origin server. It then delivers the content to the user and caches it for future requests.
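
The lookup order can be sketched as a two-tier cache in front of an origin. This is a simplified model under stated assumptions: least-recently-used (LRU) eviction stands in for "less popular content is removed", the capacities are arbitrary, and `LruCache`, `fetch`, and the sample paths are hypothetical names rather than anything CloudFront exposes.

```python
from collections import OrderedDict


class LruCache:
    """Small cache that evicts the least recently used entry when full (assumed policy)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry


def fetch(path, edge, regional, origin):
    """Look up a path in the edge cache, then the regional cache, then the origin."""
    body = edge.get(path)
    if body is not None:
        return body, "edge"
    body = regional.get(path)
    source = "regional"
    if body is None:
        body = origin[path]
        source = "origin"
        regional.put(path, body)  # keep a copy in the larger regional cache
    edge.put(path, body)          # cache at the edge for future requests
    return body, source


origin = {"/a.css": "A", "/b.js": "B", "/c.png": "C"}
edge = LruCache(capacity=2)       # small edge cache
regional = LruCache(capacity=10)  # larger regional edge cache

requests = ["/a.css", "/a.css", "/b.js", "/c.png", "/a.css"]
trace = [fetch(path, edge, regional, origin)[1] for path in requests]
print(trace)  # ['origin', 'edge', 'origin', 'origin', 'regional']
```

In the last request, `/a.css` has already been evicted from the small edge cache but is still held by the larger regional cache, so the origin is not contacted again.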

Global Reach

CloudFront’s global network is massively scaled and distributed, enabling fast content delivery to users worldwide. By strategically placing edge locations and regional caches around the globe, CloudFront minimizes latency and ensures that content is always delivered efficiently.

Security Features

Security is a critical component of CloudFront. It provides both network-level and application-level protection, ensuring that your traffic and applications are secure. Built-in protections like AWS Shield Standard are included at no additional cost. Additionally, CloudFront integrates with AWS Certificate Manager (ACM), allowing you to create and manage custom Secure Sockets Layer (SSL) certificates at no extra charge.

Pricing Structure

Amazon CloudFront’s pricing is based on actual usage, covering four main areas:

- Data Transfer Out: Charges are incurred based on the volume of data transferred from CloudFront edge locations to the internet or origin servers. Data transfer is calculated separately for different geographic regions, with pricing tiers applied accordingly. If you use AWS services as the origin for your files, you will also be charged for the use of those services.

- HTTP(S) Requests: You are charged for the number of HTTP(S) requests made to CloudFront for your content. This pricing model is straightforward and scales with the volume of traffic your content receives.

- Invalidation Requests: CloudFront allows you to remove specific objects from the cache by submitting invalidation requests. You are charged per path listed in a request, and the first 1,000 paths each month are free. Beyond this limit, charges apply for each additional path.

- Dedicated IP Custom SSL: If you opt for a custom SSL certificate with Dedicated IP support, you will be charged $600 per month for each certificate. This fee is prorated by the hour, meaning you only pay for the time the certificate is in use.
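
Because invalidations are billed per path, it can help to see what a request contains. The sketch below only builds the batch structure that the boto3 `create_invalidation` call expects; the helper name, the caller reference, and the distribution ID in the comment are hypothetical, and the call itself is left commented out because it needs AWS credentials and a real distribution.

```python
import time


def build_invalidation_batch(paths, caller_reference=None):
    """Build an invalidation batch; each entry in `paths` counts as one billable path."""
    for path in paths:
        if not path.startswith("/"):
            raise ValueError(f"invalidation path must start with '/': {path!r}")
    return {
        "Paths": {"Quantity": len(paths), "Items": list(paths)},
        # The caller reference must be unique per request; a timestamp is one simple choice.
        "CallerReference": caller_reference or str(time.time()),
    }


batch = build_invalidation_batch(["/index.html", "/images/*"], caller_reference="docs-example-1")
print(batch["Paths"]["Quantity"])  # 2

# Assumed usage with boto3 (not executed here):
# import boto3
# boto3.client("cloudfront").create_invalidation(
#     DistributionId="EXAMPLE_DISTRIBUTION_ID",  # hypothetical ID
#     InvalidationBatch=batch,
# )
```

A wildcard path such as `/images/*` is a single entry in the batch even though it can match many URLs.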

Additional Features

CloudFront offers a range of additional features to enhance content delivery and security. These include support for Web Application Firewall (WAF) to protect against common web exploits, integration with Lambda@Edge for running custom code closer to users, and real-time metrics for monitoring and optimizing performance.

Amazon CloudFront is an essential tool for any organization looking to optimize content delivery on a global scale. With its extensive network of edge locations, robust security features, and flexible pricing, CloudFront provides a powerful and cost-effective solution for delivering both static and dynamic content with low latency and high performance. Whether you’re streaming video, delivering software updates, or running a high-traffic website, CloudFront ensures your content reaches users quickly and securely, no matter where they are in the world.